Electronic Management of Assessment at the White Rose Learning Technologists’ Forum

On Tuesday 21 April, we were delighted to host the most recent White Rose Learning Technologists’ Forum, all the more so because Lisa Gray and Lynnette Lall from Jisc were on hand to facilitate a workshop on Electronic Management of Assessment (EMA). Lisa has been a lead on the JISC EMA project, which has undertaken extensive consultation across the UK and was part of a three-year study exploring technology-enhanced assessment and feedback. As well as identifying the challenges around EMA, the project aimed to gather examples of best practice, policies, processes and implementation. Ultimately, the aim is to provide guidance and recommendations to both institutions and system providers on the current state of play and the way forward. The recently published landscape report identified some broad trends, namely:

- there is lots of localised variation in how EMA is implemented, but practice is beginning to scale up;

- systems integration for EMA (e.g. SITS, Moodle, Turnitin) is uncommon and a key challenge;

- business processes (e.g. how EMA is implemented at departmental level) are often very varied, which makes it challenging to provide support, ensure a consistent student experience, and integrate systems.

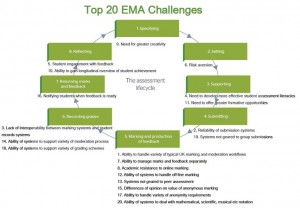

Lisa talked us through the main ‘pain points’ in the EMA process, which can be categorised according to the stages of the Assessment Lifecycle Model, developed by Manchester Metropolitan University, before we got hands-on in prioritising what we felt were the main challenges facing us in effective EMA (see my group’s contribution below!).

It was really interesting to hear about the issues facing other institutions. Naturally, there was plenty of overlap, particularly around the frustrations caused by the lack of interoperability between systems, but it was also encouraging to note how far along York St John University is in embedding electronic submission and marking into its processes. The top challenges emerging from the groups were:

- the need for greater creativity and less risk aversion in the assignment setting stage;

- reliability and interoperability of systems;

- managing the efficient and smooth return of feedback;

- dealing with deviations from standard processes, such as moderation, external markers and mitigating circumstances;

- and the lack of a longitudinal view of feedback for students and tutors.

Having ranked the challenges identified in the landscape review, JISC have set out key priorities to take forward in the second phase of the project. These are:

- EMA Requirements Map

- Feedback Hub

- EMA systems integration web resource

- Assessment & Feedback Toolkit

For the second part of the workshop, we were divided into three groups to discuss what we would like to see from JISC’s work in this area, how it could be applied, and what we thought we could contribute to each strand. Phil, Daniel and I each joined a table and have summarised the conversations below:

EMA Requirements Map

The aim of this project strand is to build a clear picture of the key EMA requirements and workflows in the form of general models that also capture the diversity of local practice. The purpose is to help system suppliers better support institutions’ practices, and to let institutions review their own workflows against best practice.

Conversation initially focused on our processes for transferring grades from their first point of entry (usually the VLE/LMS) to the student information system. Integration of the gradebook with the student information system was our top requirement, and a common technical standard for assessment data, independent of any one product or brand, was offered as a solution: a sort of EMA equivalent to SCORM or LTI for e-learning packages. We were therefore surprised to learn from another member of the group that such a standard already exists (LIS2), though it is (obviously!) not widely used.

Also required is a robust means of handling deviations from the standard submission, such as extended deadlines, mitigating circumstances and repeats, which cause headaches and spawn some weird and wonderful workarounds across universities. The University of Hull team are aiming to address some of these issues in their current development pilot of the eSAM (Electronic Assessment, Submission and Marking) tool, an output of past JISC projects from Southampton and Northumbria Universities. If the pilot succeeds, it may be possible to release the code back to the community in the future.

Finally, we felt there was an opportunity for universities to undertake some detailed process mapping (as some already have) and, through digital storytelling, to give the project an authentic voice by describing our EMA processes and experiences. Ultimately, our core requirement was for JISC to use these to represent the UK sector to the main suppliers and raise the profile of our concerns.

Feedback Hub

The aim of this project is to deliver a study exploring the potential development of a Jisc-funded tool that would deliver an aggregated view of feedback and marks, with both tutor and student views. By examining some of the pedagogic, technical and process factors involved in implementing a feedback hub, it will inform the business case, identify the options for taking this work forward (with the cost/benefits discussed for each) and recommend the way forward that would offer most value to the UK HE and FE sectors.

This group discussed the potential value of an independent solution (outside institutional VLEs and student records systems) for presenting a longitudinal, holistic view of student feedback and grades. One of the biggest concerns about an external feedback hub was the divorcing of the feedback from the assessment: what is the value of feedback if it is not in context alongside the original assessment? The general consensus of the group was that a feedback hub would be a welcome addition if it is designed and deployed to suit institutional needs. At York St John University, I can see a system of this nature supporting and enhancing the current academic tutorial process. It would allow tutors to easily see and monitor a student’s progress and feedback across the entire programme.

Assessment & Feedback Toolkit

The aim of this project is to deliver an interactive online toolkit, based around the assessment and feedback lifecycle, that will provide examples of effective practice at each stage of the lifecycle. The toolkit will be written in an action-oriented way, to enable response and action by the institutions involved and will include resources such as: tools; case studies; shorter vignettes of good practice; policies and processes; information on technologies and integrations.

On our table we discussed the potential requirements for such a toolkit. The main requirement was for various ‘lenses’ on the Assessment Lifecycle (Academic, Admin, Technical, LT/Ed Dev, Student, Strategic/Senior Management, FE etc.) so that it caters for a range of audiences. We also thought that the Toolkit should be grounded in pedagogy, underpinned by research where appropriate, and should draw together the range of resources available to help facilitate discussions and workshops around EMA and the Assessment Lifecycle (e.g. Viewpoints, TRAFFIC, MAC, REAP), as well as practical guides and case studies. The Toolkit should be appropriately licensed, so that it can be customised and developed, and designed to minimise any barriers for staff wanting to contribute case studies and resources.

We rounded off the afternoon with contributions from a few attendees about the work they are currently undertaking in relation to EMA, ranging from policy development and process improvement to long-term collaborations with system providers to develop and pilot improved system integrations. Overall, it was an enjoyable and informative afternoon. Thanks again to all of the attendees, and to Lisa and Lynnette for facilitating.

You can find more detail on the project in Lisa’s slides and the resources below. If you are interested in contributing to the solutions phase of the project, you can find details of how to get involved in this JISC EMA blog post.

[Lisa’s slides (PowerPoint)](http://blog.yorksj.ac.uk/moodle/files/2016/04/JISCEMAslidesWRLTF.pptx)

Roisin, Phil and Daniel.

Resources:

- JISC EMA project website: bit.ly/jisc-ema

- JISC EMA blog: ema.jiscinvolve.org

- Effective Assessment in a Digital Age [JISC Guide]: http://bit.ly/1HzuQWr