EUNIS e-Learning Task Force Workshop – Electronic Management of Assessment and Assessment Analytics

Last week I attended the European University Information Systems (EUNIS) e-Learning workshop on the ‘Electronic Management of Assessment and Assessment Analytics’, held at the University of Abertay, Dundee. The aim of the workshop was to discuss the current challenges Higher Education institutions face with electronic assessment and to explore how technology can enhance assessment and feedback activities, streamline administrative processes and use assessment data to improve student learning.

EMA: A lifecycle approach (Gill Ferrell, JISC UK)

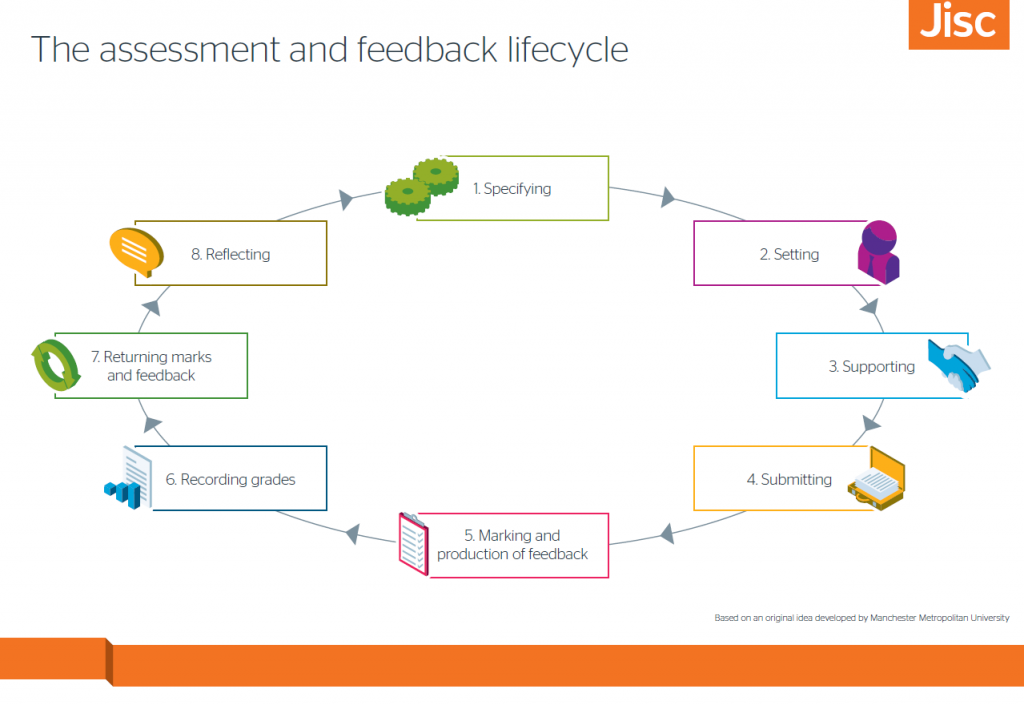

Gill Ferrell set the scene by introducing the Electronic Management of Assessment (EMA) Lifecycle, developed by Manchester Metropolitan University and adapted by Jisc.

Having completed a three-year project exploring technology-enhanced assessment and feedback, Jisc identified several challenges institutions face when adopting EMA. These included, but were not limited to: staff resistance; poor system integration and a lack of interoperability (between VLEs, student record systems, etc.); non-standardised assessment processes; and the lack of a longitudinal view of feedback for students and tutors.

Ultimately, Jisc aims to provide guidance and recommendations to both institutions and system providers on the current state of play and the way forward for EMA. For more information on the next stage of Jisc’s project, read our blog post from the recent White Rose Learning Technologists’ Forum, which focused on EMA and discussed some of these ideas.

Digital Assessment: A view from Northern Europe (Freddy Barstad, UNINETT eCampus, Norway)

Freddy introduced a national project in Norway that is looking at standardising digital assessment (in particular digital exams) across the sector. Facing many of the same challenges identified by the Jisc project, the team has developed a lifecycle with four stages: Preparation, Examination, Grading and Finalising.

Most of the 28 institutions involved in the project currently use manual processes for examinations. The project aims to streamline existing processes and procedures by working smarter and digitally. It is still in its early stages, but it aims to share experiences with external vendors, develop best-practice descriptors for digital assessment and establish the infrastructure needed to enable and facilitate digital assessment. You can find out more about the project at: www.uninett.no/digitaleksamen

Assessing Online Learning: The TALOE project (Tona Radbolia, University of Zagreb, Croatia)

The TALOE (Time to Assess Learning Outcomes in e-Learning) project team has developed a web tool that can help academics decide which assessment strategy to deploy on their online modules. Highlighting the importance of aligning assessment methods with a module’s intended learning outcomes, the tool suggests assessment options based on those outcomes.

The TALOE tool is available at: http://taloe.innovate4future.eu/ask-for-assessment-advice-2/. The first step is to enter the module’s learning outcomes. If you’re new to writing learning outcomes, the tool provides information and guidance on how to do this. It uses the ALOA (Aligning Learning Outcomes and Assessment) model, which is based on the book: A taxonomy for learning, teaching and assessing: a revision of Bloom’s taxonomy of educational objectives.

The second step is to select up to three cognitive processes under the category tabs (Remember, Understand, Apply, Analyze, Evaluate, Create) that best describe your learning outcome. Once you’re done, simply click the ‘Check assessment methods’ button to see the assessment methods best suited to your online module.

Further information about the project can be found at: http://taloe.up.pt

Hot Topics: System suppliers present their views on important trends

This session gave the event’s sponsors the opportunity to tell the group about their systems and how they are addressing an important, emerging trend in assessment. Each supplier (Blackboard, Canvas, Brightspace, Digital Assess and PebblePad) had a seven-minute pitch to persuade us to join them for a 20-minute session looking at their system in more detail. I took the opportunity to look at two systems I was unfamiliar with: Canvas and Digital Assess.

Canvas

The Canvas demonstration looked at how the VLE supports the different stages of the EMA lifecycle. I was particularly impressed by the system’s ‘speed marking’ feature. Similar to Turnitin’s GradeMark, it allows staff to mark online, adding annotations and comments directly onto the student’s submission as well as using a rubric or marking grid. Tutors can also add video feedback for each piece of student work. What’s really impressive is that this feature can be used to start a reflective dialogue between student and tutor: the student can reply to the feedback with a video of their own. Due to time constraints and other pressures, it’s not always possible for academics to meet students individually to discuss their work, and this feature offers a digital way of doing so.

Another thing that stood out for me was the system’s integration with third-party applications. For example, it’s very easy for a tutor to add a YouTube, Vimeo or TEDx video to an assignment, and for students to submit their work directly from Dropbox. Although we have no immediate plans to move away from Moodle, it was great to get an insight into what other suppliers are offering.

Digital Assess

Digital Assess (previously TAG Assessment) is an adaptive comparative judgement system that allows each piece of student work to be judged multiple times by the student’s peers. A grade is then awarded according to the work’s position in a ranking built from all the judgements made across the assessed work. Digital Assess encourages learners to think critically about the assessment task and gain a deeper understanding of the assessment criteria.
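
The presentation didn’t say which model Digital Assess uses to turn judgements into a ranking. In adaptive comparative judgement generally, a judge compares two pieces of work at a time and picks the better one, and a paired-comparison model such as Bradley-Terry (closely related to the Rasch model) turns those decisions into a scale. The sketch below illustrates that general idea under stated assumptions: judgements arrive as hypothetical (winner, loser) pairs of script IDs, and the function names are made up for illustration. It is not Digital Assess’s implementation, and it leaves out the ‘adaptive’ step of choosing which pairs to show judges next.

```python
import math
from collections import defaultdict


def bradley_terry(judgements, iterations=200):
    """Estimate a strength for each script from (winner, loser) pairs.

    Illustrative sketch only: uses the standard minorise-maximise update
    for the Bradley-Terry model. As an assumption, each script also gets
    one virtual win and one virtual loss against a fixed reference of
    strength 1.0, so scripts that never win (or never lose) still get a
    finite, positive estimate and the scale stays anchored.
    """
    wins = defaultdict(int)
    pair_counts = defaultdict(int)  # number of comparisons per unordered pair
    scripts = set()
    for winner, loser in judgements:
        if winner == loser:
            raise ValueError(f"script {winner!r} cannot be compared with itself")
        wins[winner] += 1
        pair_counts[frozenset((winner, loser))] += 1
        scripts.update((winner, loser))

    strength = {s: 1.0 for s in scripts}
    for _ in range(iterations):
        updated = {}
        for s in scripts:
            # Two virtual comparisons against the reference script.
            denom = 2.0 / (strength[s] + 1.0)
            for pair, n in pair_counts.items():
                if s in pair:
                    (other,) = pair - {s}
                    denom += n / (strength[s] + strength[other])
            # Observed wins plus the one virtual win.
            updated[s] = (wins[s] + 1) / denom
        strength = updated
    return strength


def rank_scripts(judgements):
    """Return (script, log-strength) pairs, strongest first."""
    strength = bradley_terry(judgements)
    ordered = sorted(strength.items(), key=lambda kv: kv[1], reverse=True)
    return [(s, round(math.log(p), 2)) for s, p in ordered]


if __name__ == "__main__":
    peer_judgements = [("A", "B"), ("A", "C"), ("B", "C"), ("A", "B"), ("B", "C")]
    for script, score in rank_scripts(peer_judgements):
        print(script, score)
```

Here script A wins every comparison and C loses every one, so the ranking comes out A, B, C. Reporting log-strengths puts scores on a scale centred on the reference script, which is the kind of scale a grade boundary could then be drawn on.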

Rethinking Assessment Practices (Lisa Gray, Jisc)

Lisa kicked proceedings off after lunch by discussing why assessment practices had to change and the role technology can play in supporting this. She highlighted a growing consensus in the sector that developing learners’ judgement and self-regulation, as critical skills for life and employment, is of utmost importance. Lisa suggested a ‘principled approach’ as a starting point for changing the design of assessment.

Transforming Assessment and Feedback (Rola Ajjawi, University of Dundee)

Rola Ajjawi’s presentation focussed on how the Medical Education team at the University of Dundee had remodelled the assessment and feedback process for an online distance learning programme (PG Certificate in Medical Education). The main issues they wanted to address were: the quality and quantity of feedback students received; the timeliness of feedback, which often varied across the programme; and the lack of dialogue in the process, which left both staff and students feeling isolated. Staff delivering the programme often wondered whether students read the feedback, understood it and acted upon it.

As part of the transformation the team developed a set of education/feedback principles for the programme (based on the work of Nicol and Macfarlane-Dick). These were as follows:

- Feedback should be dialogic in nature

- Assessment design should afford opportunities for feedback to be used in future assignments

- Feedback should develop students’ evaluative judgements and monitoring of their own work

- Students should be empowered to seek feedback from different sources.

The revamped assessment process required students to complete a cover sheet on which they evaluated their work against the assessment criteria. They were also required to state how they had used feedback from a previous module to inform the development of the current assignment. Students could also request feedback from the tutor on specific areas of the assignment.

This kick-started the dialogic process between student and tutor. Throughout the programme, students were required to keep a reflective journal that all tutors had access to, using the wiki tool in the institution’s VLE. After receiving feedback on their assignment, each student was required to answer the following questions in their reflective journal:

- How well does the tutor feedback match with your self-evaluation?

- What did you learn from the feedback process?

- What actions, if any, will you take in response to the feedback process?

- What, if anything, is unclear about the tutor feedback?

The tutor then provided further feedback, which was carried forward into the programme’s next module. Adopting this approach led students to rethink the role of feedback and change their approach to the assignment. Rola also reported that students valued the opportunity to ask for specific feedback and that it helped close the feedback loop for that module of the programme. After the first iteration of the new approach, the Medical Education teaching team added a further education principle: feedback from learners should be used to improve teaching.

A more detailed account of the project can be found at: http://jiscdesignstudio.pbworks.com/w/page/50671082/InterACT%20Project

Student Engagement in the Assessment Practice (Nora Mogey, University of Edinburgh)

This session looked at how the University of Edinburgh is using Digital Assess (the adaptive comparative judgement tool) on its Edinburgh Award. Currently, the Edinburgh Award consists of 40 programmes involving 800 students. As part of the award, students are asked to compile a reflective report on their experiences. A first draft of this report is then formatively peer assessed using Digital Assess, with students asked to provide one constructive comment and one critical comment on each report. The final assessment then uses Digital Assess’s peer ranking system.

Digital Assess is also being used on the Physics programmes at the University of Edinburgh for formative feedback on draft essay plans. The team found that mid-range students performed better in the final summative assessment. Feedback from students using Digital Assess showed that they did learn from assessing their peers’ work: they could identify where they had gone wrong and gained an insight into how others had tackled the topic. Student assessment literacy was identified as an area for further development, with students saying they were currently basing their judgements on the quality of the writing. Students felt they lacked the background knowledge to assess and that more support was needed to help them interpret the feedback they received. However, the initiative did promote assessment for learning, develop assessment literacy skills and empower students to take shared ownership of learning and assessment.

Discussion

In the discussion session we were asked to answer the question: how can we best support the development of student (and staff) assessment literacies? My table, which was reduced in numbers by this point (the Norwegian contingent having headed to the beer garden), started by talking about the importance of dialogue between staff and students in the assessment process. The key points from our discussion were as follows:

- Embrace students as change agents in assessment design – make students feel informed and part of the change and practice.

- Position students as partners in their education, not consumers

- Help students to understand the value of the assessment – build core values into the programme

- Help students to understand the value of feedback from peers and not just the “academic experts”

- Embed formative assessment that tangibly prepares students for summative assessment, i.e. by helping them understand the assessment criteria

- Allow students to be involved in creating their own assessment criteria. For example, encourage them to take ownership of, or be involved in, the development of an assessment rubric.

- Look at the longitudinal view of assessment and feedback across the programme – build links between modules and don’t treat assessment in isolation.

In the comments below, please share your thoughts on, or approaches to, EMA. Are you doing anything different from the approaches identified above? Are you facing different challenges? What innovative approaches are you adopting to enhance the student experience of assessment and feedback within a programme or module?

Daniel

Thank you for a very detailed post, Daniel – I was impressed with the range of sessions you attended at the conference.

The use of third-party applications in the Canvas session was very interesting. Whether it is more beneficial to let learners engage with technologies they already use, rather than introducing new ones for them to master, will always be an interesting debate. Although insights are important, I feel that showing me possibilities should always be accompanied by how to integrate them into existing workflows. Will Moodle allow integration of third-party applications within assignments?

I’d be interested to know: how will your attendance influence recommendations for the use of technology in this area within the institution? Will any of the ideas from the conference appear in the TEL framework in future?

Thanks for a great update!